- 15 Jan, 2026

- Artificial Intelligence

- By Musketeers Tech

How to Train ChatGPT on Your Own Data

“Can we train ChatGPT on our data?” Most of the time, the right answer is: you don’t retrain the model; you retrieve the right knowledge at the right time with the right permissions, then generate and cite. This guide walks you through what “training” really means in practice, when to use RAG vs fine-tuning, how to design a secure architecture, and how to measure success in production.

What “training ChatGPT on your data” actually means

In enterprise settings, “training” usually means building a retrieval layer and governance around the model, not changing the model’s weights. The real work is below the waterline:

- Retrieval-augmented generation (RAG): index your content, retrieve the most relevant chunks, and feed them to the model as context so it can answer with citations.

- Permissions and privacy: enforce role-based access control (RBAC), tenant isolation, and data minimization so the model only “sees” what a user is allowed to see.

- Evals and monitoring: track accuracy, safety, latency, cost, and drift; continuously improve chunking, prompts, and ranking.

Fine-tuning is powerful, but it’s best for stylistic preferences or pattern learning—not for encoding your entire knowledge base.

The practical ladder of options

From fastest to most control:

- Custom GPTs (file uploads): quickest way to experiment; great for small, static corpora and prototypes.

- RAG (API + vector store): the default for company knowledge; scales to growing content with citations and access controls.

- Fine-tuning (patterns): good for tone, formatting, or narrow task patterns; not a replacement for retrieval.

- AI agents (orchestrated tools): when you need multi-step workflows, actions, and deeper integrations with business systems.

When to use which

- Use Custom GPT uploads when you’re validating usefulness with a small doc set or personal workflows.

- Choose RAG when your knowledge updates frequently, must be permissioned, and needs sourcing.

- Fine-tune when you need consistent style or domain-specific task patterns (e.g., “convert ticket to SOP with our structure”).

- Build agents when answers must trigger actions: create tickets, update CRM, query analytics, or run approvals.
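For fine-tuning, the training data is a set of example conversations that demonstrate the pattern. It is not a dump of your knowledge base. The sketch below assumes OpenAI's chat fine-tuning format, which is JSONL with one `{"messages": [...]}` object per line. The system prompt, ticket, and SOP section names are hypothetical placeholders for the "convert ticket to SOP" task.

```python
import json

# Hypothetical house format for the "convert ticket to SOP" task
SYSTEM = "Convert support tickets into SOPs with the sections Purpose, Steps, Owner."

# Each pair is (input ticket, the exact SOP we want the model to imitate); illustrative only
examples = [
    (
        "Customer cannot reset password; reset email never arrives.",
        "Purpose: Restore account access.\nSteps: 1. Check spam folder. 2. Resend reset email.\nOwner: Support",
    ),
]

def to_jsonl(pairs):
    """Serialize (ticket, sop) pairs as chat-format records, one JSON object per line."""
    records = []
    for ticket, sop in pairs:
        records.append(json.dumps({
            "messages": [
                {"role": "system", "content": SYSTEM},
                {"role": "user", "content": ticket},
                {"role": "assistant", "content": sop},
            ]
        }))
    return "\n".join(records) + "\n"

jsonl = to_jsonl(examples)
print(jsonl)
```

A real training set would need many more examples than this one. The examples teach structure and tone. The facts an SOP references should still come from retrieval at answer time.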

Quick comparison

| Approach | Time to value | Governance | Best for | Limits |
|---|---|---|---|---|
| Custom GPT (uploads) | Hours | Low | Prototypes, small teams | Weak permissions, version drift |
| RAG | Days–weeks | High | Company knowledge at scale | Requires infra + ops |
| Fine-tune | Weeks | Medium | Style/pattern learning | Not a KB replacement |
| AI agent | Weeks–months | High | Actions + workflows | Highest integration effort |

A reference architecture: RAG-first AI agent

At a high level:

- Ingest
  - Connectors: Google Drive, SharePoint, Confluence, CRM, wikis, PDFs.
  - Normalize formats to text/HTML; remove boilerplate and navigation.
- Chunk and embed
  - Smart chunking (by semantic boundaries); add metadata (source, author, ACLs).
  - Generate embeddings; store in a vector DB with text + metadata.
- Secure retrieval
  - Filter by tenant and RBAC before vector search.
  - Hybrid search: dense vectors plus keyword (e.g., BM25) matching, constrained by metadata filters.
- Compose prompts
  - Stuff retrieved context with clear system instructions: cite sources, refuse if not confident, respect privacy.
- Generate and cite
  - The model answers with citations, or returns "no answer" when uncertain.
- Optional tools/actions
  - Integrate tools: database queries, ticket creation, CRM updates, analytics.
- Logging, evals, and monitoring
  - Capture prompts, retrieved docs, outputs, latency, and costs.
  - Human-in-the-loop review for sensitive flows.
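The "chunk and embed" step can be sketched as a paragraph-aware chunker with overlap. This is a simplified stand-in for semantic chunking. It treats blank-line-separated paragraphs as boundaries and measures size in characters, although token budgets are often used instead. The `max_chars` and `overlap` values are hypothetical defaults that you would tune with evals. Each chunk carries its source and ACL metadata so that retrieval can filter on them later.

```python
def chunk_document(text, source, acl, max_chars=800, overlap=1):
    """Pack blank-line-separated paragraphs into chunks of roughly max_chars.

    A single oversized paragraph still becomes its own chunk. When overlap > 0,
    the last `overlap` paragraphs of each chunk are repeated at the start of the next.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks, current = [], []
    for para in paragraphs:
        candidate = current + [para]
        if current and len("\n\n".join(candidate)) > max_chars:
            chunks.append(current)
            # Carry trailing paragraphs forward for context (guard: current[-0:] would copy everything)
            current = current[-overlap:] + [para] if overlap else [para]
        else:
            current = candidate
    if current:
        chunks.append(current)
    # Attach the metadata that retrieval-time filters and citations rely on
    return [
        {"id": f"{source}#{i}", "text": "\n\n".join(c), "source": source, "acl": list(acl)}
        for i, c in enumerate(chunks)
    ]
```

Overlap means a fact near a chunk boundary still appears with its surrounding context in at least one chunk. The cost is some duplicated storage.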

Minimal RAG pseudo-implementation (Python)

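```python
# pip install openai chromadb
import chromadb
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

# 1) Ingest and chunk (simplified: one pre-chunked document)
docs = [{"id": "kb-1", "text": "Return policy: 30 days...", "acl": ["sales", "support"], "source": "kb/returns.md"}]

# 2) Embed + store
chroma = chromadb.Client()  # in-memory client; use a persistent client in production
collection = chroma.get_or_create_collection("kb", metadata={"hnsw:space": "cosine"})

def embed(texts):
    res = client.embeddings.create(model="text-embedding-3-large", input=texts)
    return [d.embedding for d in res.data]

for d in docs:
    # Store each allowed role as a scalar boolean flag so the vector store can filter on it
    meta = {"source": d["source"], **{f"role_{r}": True for r in d["acl"]}}
    collection.add(ids=[d["id"]], documents=[d["text"]], embeddings=embed([d["text"]]), metadatas=[meta])

# 3) RBAC-filtered retrieval: the vector store applies the filter, not the model
def retrieve(query, user_roles):
    conditions = [{f"role_{r}": True} for r in user_roles]
    if not conditions:
        return []  # no roles, no access
    where = conditions[0] if len(conditions) == 1 else {"$or": conditions}
    results = collection.query(
        query_embeddings=embed([query]),  # same embedding model as ingestion
        n_results=5,
        where=where,
    )
    return list(zip(results["documents"][0], results["metadatas"][0]))

# 4) Compose and generate with citations
def answer(question, user_roles):
    hits = retrieve(question, user_roles)
    if not hits:
        return "No answer: nothing you have access to covers this question."
    context = "\n\n".join(f"[{i+1}] ({m['source']}) {doc}" for i, (doc, m) in enumerate(hits))
    chat = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[
            {"role": "system", "content": "Answer only from the context. Cite sources as [1], [2]. "
                                          "If the context does not contain the answer, say you don't know."},
            {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
        ],
        temperature=0.2,
    )
    return chat.choices[0].message.content

print(answer("What is our return policy?", user_roles=["support"]))
```

Notes: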

- Replace Chroma with your preferred vector store (Postgres/pgvector, Pinecone, Weaviate, OpenSearch).

- Enforce tenant and RBAC filters at query time. Never rely on the model to “remember” permissions.

- Cache embeddings and responses to reduce cost.
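Here is a minimal sketch of embedding caching. It assumes embeddings are keyed by model name plus a SHA-256 hash of the chunk text, so unchanged chunks are not re-embedded when content is re-ingested. The `EmbeddingCache` class and its in-memory dict are illustrative. In production the cache would live in a database or key-value store. `embed_fn` can be any batch function with the shape of `embed` in the example above.

```python
import hashlib

class EmbeddingCache:
    """Wraps a batch embedding function and only calls it for texts not seen before."""

    def __init__(self, embed_fn, model):
        self.embed_fn = embed_fn  # callable: list[str] -> list[list[float]]
        self.model = model
        self.store = {}  # cache key -> embedding vector
        self.calls = 0  # number of texts actually sent to embed_fn

    def _key(self, text):
        # Include the model name so switching models never returns stale vectors
        return self.model + ":" + hashlib.sha256(text.encode("utf-8")).hexdigest()

    def embed(self, texts):
        # dict.fromkeys de-duplicates while preserving order
        missing = [t for t in dict.fromkeys(texts) if self._key(t) not in self.store]
        if missing:
            for text, vector in zip(missing, self.embed_fn(missing)):
                self.store[self._key(text)] = vector
            self.calls += len(missing)
        return [self.store[self._key(t)] for t in texts]
```

For example, `EmbeddingCache(embed, "text-embedding-3-large").embed(chunk_texts)` sends each distinct chunk text to the API only once.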

Security and compliance by design

Layer security like a real app:

- Data classification: tag public/internal/confidential/regulated. Drive routing and masking rules from labels.

- RBAC + tenant isolation: authorization happens before retrieval; include ACLs in metadata and filters.

- Encryption + secrets: encrypt at rest and in transit; store keys and provider tokens securely (KMS/Secrets Manager).

- Audit logs + retention: log queries, retrieved docs, and outputs for investigations; apply retention requirements.

- PII/PHI hygiene: redact where necessary; apply data-loss prevention and policy-based refusals in prompts and middleware.

- Human-in-the-loop: require approvals for high-risk actions or content changes.
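For PII hygiene, a first line of defense is to mask obvious identifiers before text is embedded, logged, or sent to the model. The patterns below are deliberately simple. They cover only email addresses and North-American-style phone numbers and will miss many real formats. Real deployments usually pair rules like these with a dedicated DLP or PII-detection service.

```python
import re

# Simplified, illustrative patterns; they will miss many real-world formats
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\(?\b\d{3}\)?[-.\s]?\d{3}[-.\s]?\d{4}\b"),
}

def redact(text):
    """Replace each match with a typed placeholder; return the text and per-type counts for audit logs."""
    counts = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts

print(redact("Contact jane.doe@example.com or 555-123-4567."))
```

The counts can go into the audit log. That records that redaction happened without storing the redacted values themselves.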

Implementation checklist

- Define goals: deflection rate, time to first response, sales enablement speed, SOP compliance.

- Inventory sources: what’s in scope (wikis, PDFs, CRM, tickets) and what’s out.

- Map permissions: users, groups, tenants, and sensitivity classes.

- Choose stack: vector DB, embedding model, orchestration, hosting, observability.

- Ingest and normalize: connectors, ETL, chunking, metadata schema, ACLs.

- Retrieval strategy: dense + sparse hybrid, reranking, freshness signals.

- Prompting: instructions, answer format, citation style, refusal and escalation behaviors.

- Evals: golden datasets, accuracy and safety harnesses, regression tests in CI.

- Monitoring: latency, cost, token usage, hallucination flags, feedback loops.

- Rollout plan: pilot, shadow testing, staged access, training, documentation.
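Mapping permissions and defining a metadata schema early makes the "authorize before retrieval" rule concrete. The sketch below uses hypothetical field names (`tenant_id`, `allowed_roles`, `sensitivity`, `clearance`). It applies a simple rule: a user can read a chunk only if the tenant matches, the user holds at least one allowed role, and the user's clearance covers the chunk's sensitivity class.

```python
from dataclasses import dataclass

# Ordered sensitivity classes, lowest to highest (mirrors the classification labels above)
LEVELS = ["public", "internal", "confidential", "regulated"]

@dataclass(frozen=True)
class ChunkMeta:
    source: str
    tenant_id: str
    allowed_roles: frozenset
    sensitivity: str = "internal"

@dataclass(frozen=True)
class User:
    tenant_id: str
    roles: frozenset
    clearance: str = "internal"

def can_read(user, chunk):
    """Tenant isolation first, then RBAC, then sensitivity clearance."""
    return (
        user.tenant_id == chunk.tenant_id
        and bool(user.roles & chunk.allowed_roles)
        and LEVELS.index(user.clearance) >= LEVELS.index(chunk.sensitivity)
    )

def authorized(user, chunks):
    """Drop every chunk the user may not see, before anything reaches the prompt."""
    return [c for c in chunks if can_read(user, c)]
```

In production the same rule should be pushed down into the vector store's metadata filter. Unauthorized chunks then never become search candidates, and the application-level check becomes a second layer of defense.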

Cost, latency, and quality trade-offs

- Embeddings
  - Larger embedding models can improve recall but cost more to run and store. Start with a strong baseline and test.
  - Chunking matters more than you think: semantic boundaries and overlap improve relevance.
- Retrieval
  - Hybrid search (BM25 + vectors) boosts recall on short queries and jargon.
  - Use reranking on the top 20–50 candidates for better precision.
- Generation
  - Smaller, faster models plus good retrieval often beat larger models without retrieval.
  - Constrain output: target format, max tokens, and a low temperature for consistent answers.
- Caching
  - Embed once, reuse often. An answer cache for frequent queries can cut cost and latency substantially.
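Hybrid search needs a way to merge the keyword ranking and the vector ranking. Reciprocal rank fusion (RRF) is one common and simple option, though not the only one. It scores each document by summing 1/(k + rank) across the lists, with k = 60 as the customary default from the original RRF paper. The document IDs below are hypothetical.

```python
from collections import defaultdict

def reciprocal_rank_fusion(ranked_lists, k=60):
    """Merge several ranked lists of document IDs (best first) into one fused ranking."""
    scores = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for a stable order
    return sorted(scores, key=lambda d: (-scores[d], d))

bm25_hits = ["returns.md", "shipping.md", "warranty.md"]
vector_hits = ["warranty.md", "returns.md", "refunds.md"]
print(reciprocal_rank_fusion([bm25_hits, vector_hits]))
# → ['returns.md', 'warranty.md', 'shipping.md', 'refunds.md']
```

RRF uses only rank positions, so BM25 scores and cosine similarities never need to be put on the same scale. The top of the fused list is what you would pass to a reranker.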

Fine-tuning vs RAG vs agents: a quick decision path

- Do you need to answer questions from a changing knowledge base with citations and permissions? Start with RAG.

- Do you need consistent style or task patterns from a fixed prompt? Consider fine-tuning.

- Do you need to take actions across systems or multi-step workflows? Orchestrate an agent with tools.

Measuring success: evals and monitoring

Track these metrics:

- Helpfulness: exact match and semantic similarity to reference answers.

- Grounding: citation correctness and source coverage.

- Safety: refusal rates on restricted topics; PII leakage attempts blocked.

- Latency and cost: P50/P95 latency by step; cost per resolved query.

- Retrieval quality: recall@k, MRR, and reranker gains.

- Human feedback: thumbs up/down, issue tags (irrelevant, stale, unsafe).

Keep a golden set of annotated Q&A. Run regression evals on every schema, chunking, or model change.
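Retrieval metrics on the golden set are cheap to compute. The sketch below assumes each golden question is annotated with a set of relevant document IDs and that the retriever returns a ranked list of IDs. The `golden` and `retrieved` data are illustrative.

```python
def recall_at_k(retrieved, relevant, k):
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & set(relevant)) / len(relevant)

def reciprocal_rank(retrieved, relevant):
    """1 / rank of the first relevant result, or 0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0

def evaluate(golden, retrieved, k=5):
    """golden: question -> set of relevant IDs; retrieved: question -> ranked list of IDs."""
    n = len(golden)
    recall = sum(recall_at_k(retrieved[q], rel, k) for q, rel in golden.items()) / n
    mrr = sum(reciprocal_rank(retrieved[q], rel) for q, rel in golden.items()) / n
    return {"recall@k": recall, "mrr": mrr}

golden = {"return window?": {"returns.md"}, "warranty length?": {"warranty.md", "faq.md"}}
retrieved = {"return window?": ["shipping.md", "returns.md"], "warranty length?": ["warranty.md"]}
print(evaluate(golden, retrieved, k=5))
# → {'recall@k': 0.75, 'mrr': 0.75}
```

You can run `evaluate` in CI on every schema, chunking, or model change and fail the build when recall drops below an agreed baseline. That turns the golden set into a regression test.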

Common pitfalls to avoid

- Encoding all knowledge via fine-tuning. Use RAG for knowledge, fine-tune for patterns.

- Skipping permissions in retrieval. Enforce RBAC and tenant filters early and always.

- Over-chunking or under-chunking. Tune size and overlap with evals.

- No “I don’t know.” Explicitly allow abstention with escalation.

- Ignoring freshness. Add timestamps and boost recent content where relevant.

- No product owner. Treat this like a real product with backlog, SLAs, and KPIs.
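Freshness can be folded into ranking as a small bonus that decays over time. The sketch below assumes each chunk has an `updated_at` timestamp and a relevance score between 0 and 1. The exponential half-life decay and the `weight` and `half_life_days` values are illustrative choices to tune with evals, not a standard. Apply the bonus only to content types where recency actually matters.

```python
from datetime import datetime, timezone

def freshness_boost(score, updated_at, now, half_life_days=90.0, weight=0.2):
    """Add up to `weight` to a relevance score; the bonus halves every `half_life_days`."""
    age_days = max((now - updated_at).total_seconds() / 86400, 0.0)  # clamp future timestamps to 0
    return score + weight * 0.5 ** (age_days / half_life_days)

now = datetime(2026, 1, 15, tzinfo=timezone.utc)
fresh = freshness_boost(0.70, datetime(2026, 1, 15, tzinfo=timezone.utc), now)
stale = freshness_boost(0.75, datetime(2025, 1, 15, tzinfo=timezone.utc), now)
print(round(fresh, 3), round(stale, 3))
```

In this example a slightly less relevant but current document outranks a year-old one. The bonus is capped at `weight`, so a stale document can still win if it is clearly more relevant.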

Tools and stack suggestions

- Vector stores: Postgres/pgvector, Pinecone, Weaviate, OpenSearch, Milvus.

- Orchestration: LangChain, LlamaIndex, LangGraph for agents; simple frameworks work too.

- Embeddings: OpenAI, Cohere, Voyage, or domain-specific models; test recall and cost.

- Reranking: Cohere, Voyage, or open-source cross-encoders.

- Models: GPT-4 class for highest quality; smaller models for cost-sensitive, cache-heavy paths.

- Observability: Arize Phoenix, LangSmith, human review dashboards.

When to bring in experts

If you need a secure, governed AI assistant that integrates with your systems and respects your access controls, a specialist team accelerates time-to-value and de-risks production. We build RAG-first assistants and agents with enterprise security, evals, and monitoring baked in.

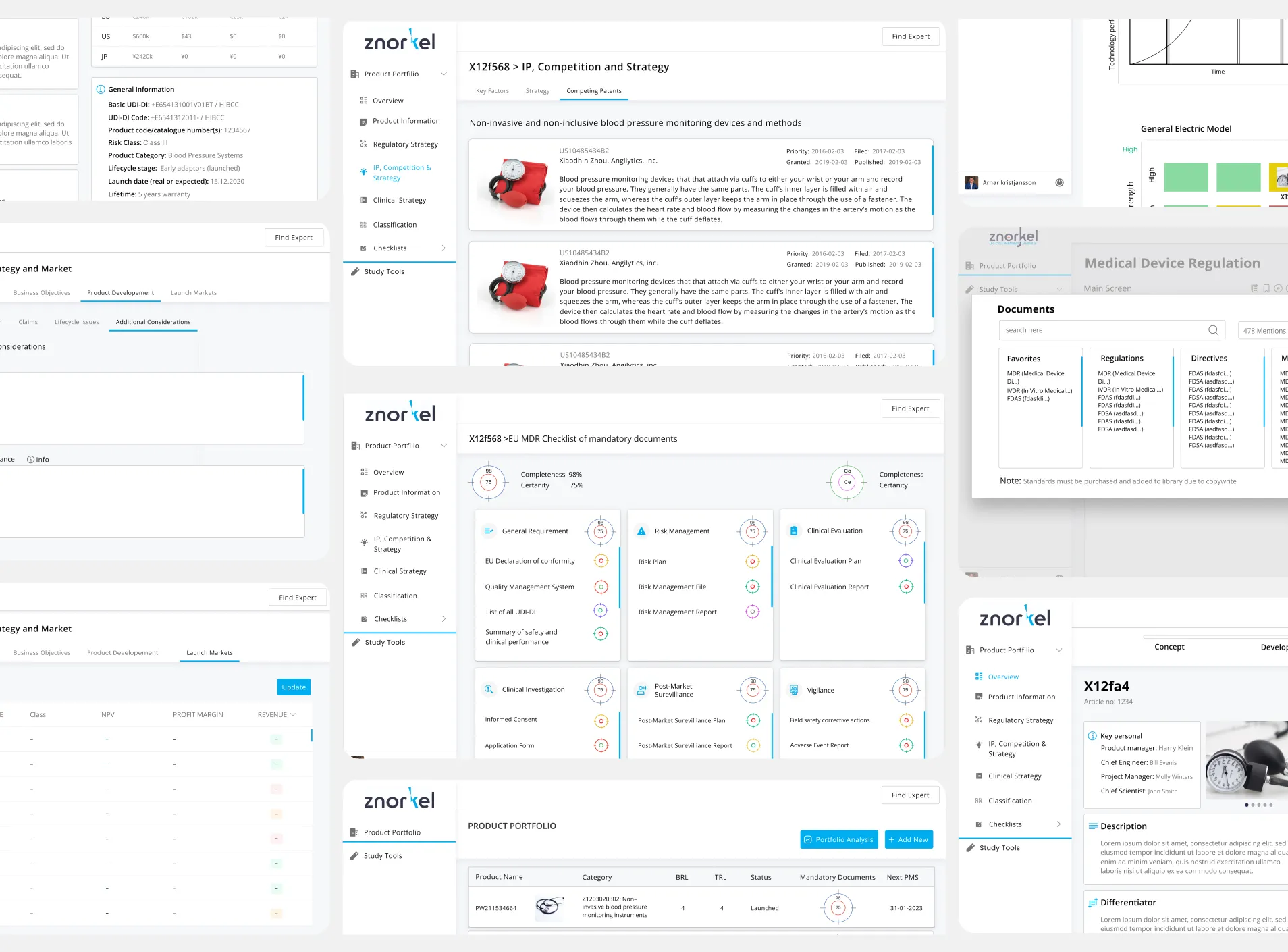

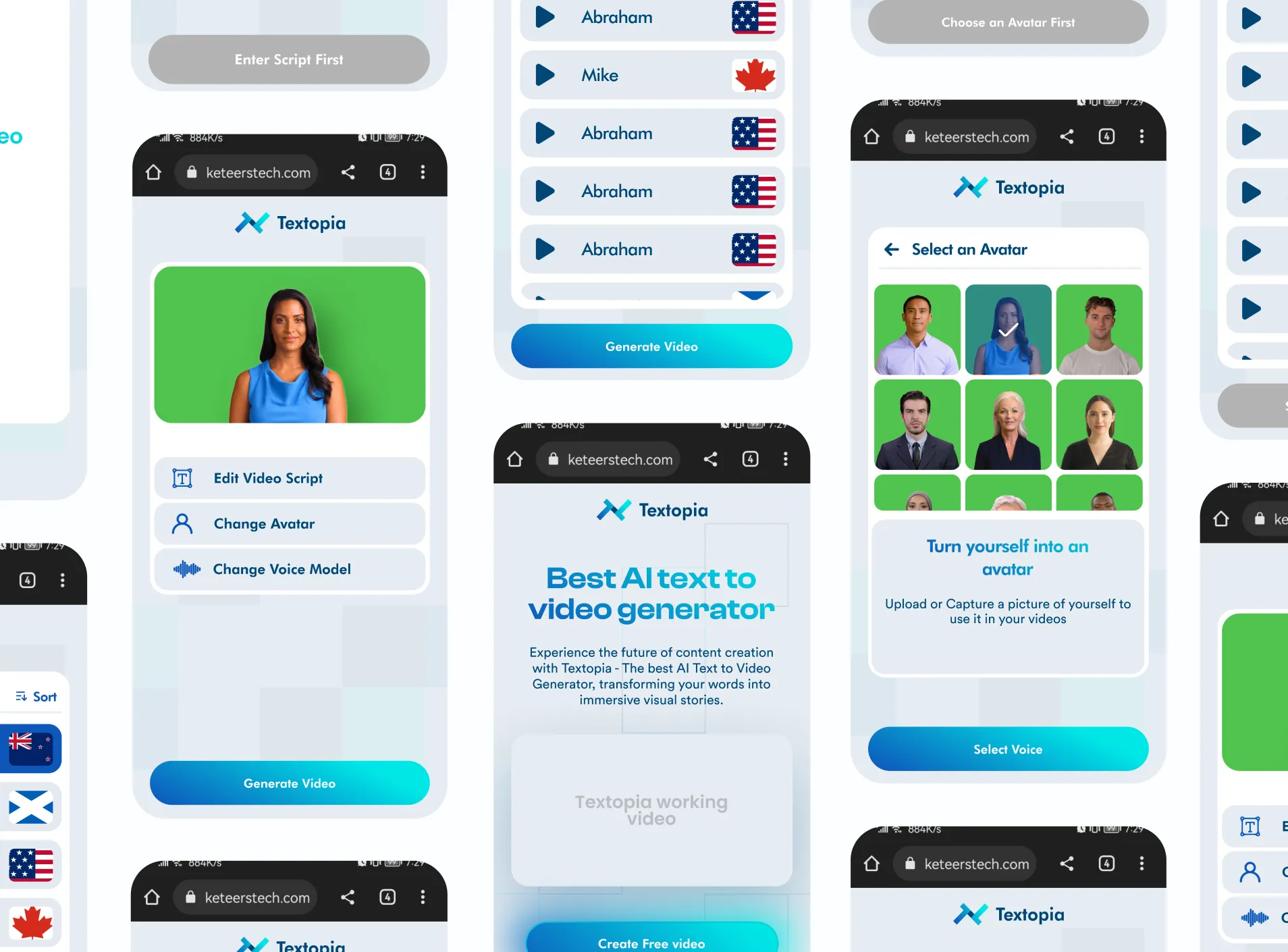

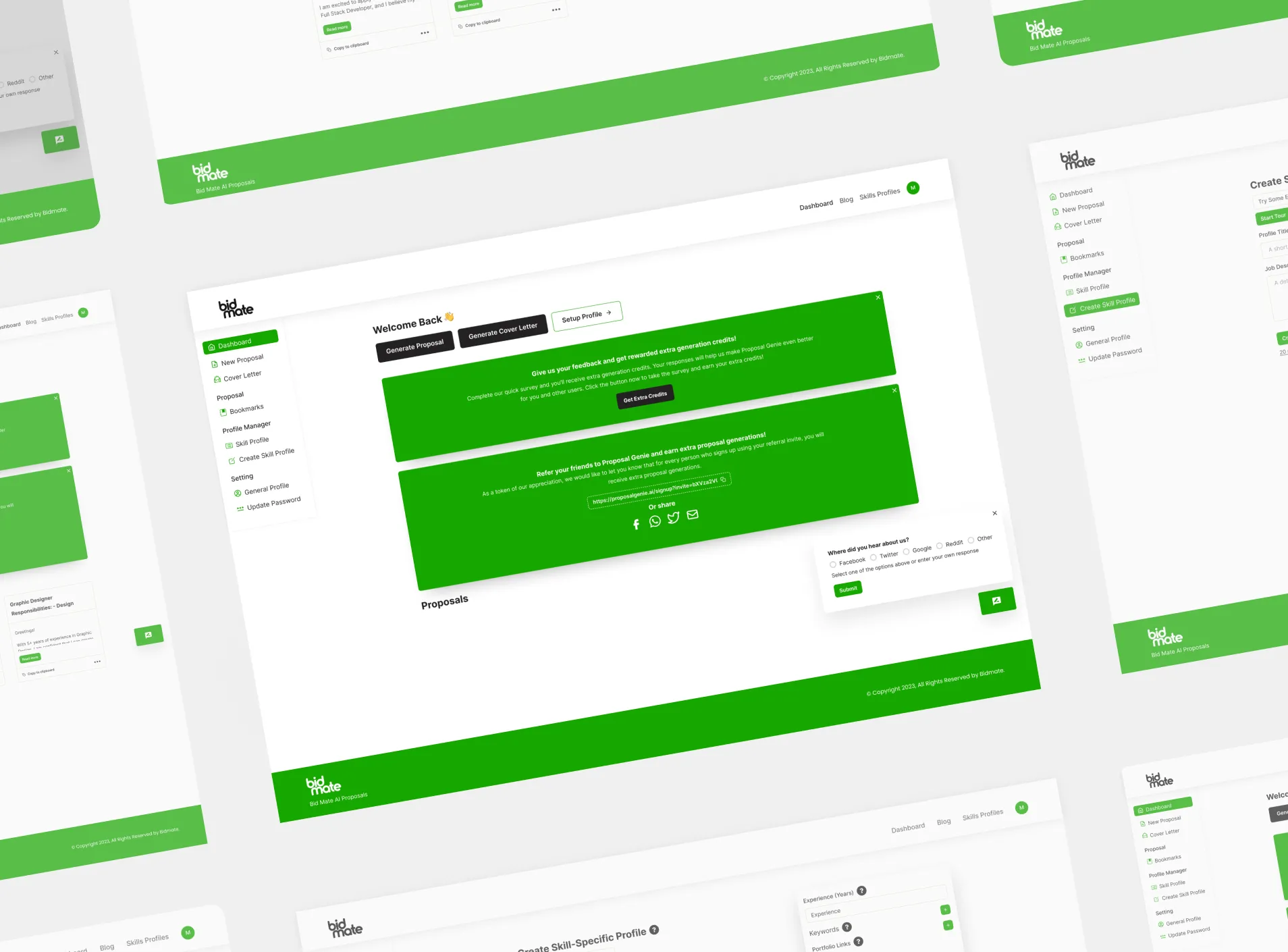

- Explore our AI services: Generative AI Application Services, AI Agent Development, and CTO as a Service.

- See how we’ve delivered results: browse our portfolio and related insights like How to Train ChatGPT and AutoGPT vs ChatGPT.

Frequently Asked Questions

Do we need to fine-tune ChatGPT on our data?

Usually no. Start with RAG to retrieve relevant, permissioned context. Fine-tune only for stable patterns or style.

Conclusion

Training ChatGPT on your own data is mostly about retrieval, permissions, and product discipline—not retraining the model. Start with a clean RAG pipeline, layer security like any production app, measure relentlessly, and evolve toward agents only when you need actions and workflows. Done right, you’ll ship a useful, safe assistant quickly—and you’ll keep improving it with real-world feedback.

- ChatGPT

- AI customization

- business AI

- data privacy

- machine learning

- generative AI

- API integration

- enterprise AI
